If you’ve already rolled out the basic sensitivity labels in Microsoft Purview, you’ve made a solid start toward securing your Copilot deployment. These labels help control who can access content, how it’s shared, and what happens when it leaves your environment. But there’s another layer of protection that is often overlooked, and it directly affects how Copilot interacts with your content.

You might not want certain files to become part of Copilot’s “grounding” process, where it pulls context from your data to generate responses. Whether it’s draft financial results, confidential strategy notes, or a raw dataset that shouldn’t influence output, some files should simply stay off Copilot’s radar. The good news is that you don’t need to remove access entirely or move these files to a separate location. You just need to adjust the EXTRACT permission in your sensitivity labels.

This post walks through what the EXTRACT setting does, how to configure it in the Microsoft Purview portal, and what the user experience looks like when this label is applied.

Why basic sensitivity labels aren’t always enough

Let’s say you have a document that multiple team members need to read. Maybe it’s a quarterly revenue breakdown or an internal performance analysis. It’s not top secret, and people are allowed to access it, but you don’t want Copilot to use this file to summarise trends, compare results, or build new presentations.

This scenario is more common than you’d think. With Copilot becoming more deeply embedded in Microsoft 365 apps, it’s important to think beyond user permissions and consider Copilot interaction permissions. You may trust your users with the file, but that doesn’t mean you want it to shape the content Copilot produces.

That’s where advanced sensitivity label settings come into play.

Introducing the EXTRACT permission

The EXTRACT permission is one of the usage rights in the Rights Management encryption that a sensitivity label can apply, so it only comes into play when your label encrypts content. When a user holds this right, Copilot (which works with the user’s own permissions) and other AI services can use the file as input for grounding. That means Copilot can read the content, interpret its meaning, and use it to inform answers or generate new content.

When EXTRACT is disabled, Copilot is effectively blind to the file. The file still exists. Users can still open it and work with Copilot within the file. But Copilot can’t read it behind the scenes or include it in its reasoning process.
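To make the rule concrete, here is a minimal Python model of the check. It assumes, in line with Microsoft’s guidance on encrypted content, that the user must hold both the VIEW and EXTRACT usage rights before Copilot can use a file for grounding. The `Document` class and the `can_ground` and `grounding_candidates` functions are hypothetical illustrations, not a Microsoft API.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    """Hypothetical stand-in for a labelled file and the rights a user holds on it."""
    name: str
    user_rights: set[str] = field(default_factory=set)


def can_ground(doc: Document) -> bool:
    # Modelled rule: the user needs both VIEW and EXTRACT
    # for Copilot to read the file behind the scenes.
    return {"VIEW", "EXTRACT"} <= doc.user_rights


def grounding_candidates(docs: list[Document]) -> list[str]:
    # Keep only the files Copilot could draw on when answering a prompt.
    return [d.name for d in docs if can_ground(d)]


docs = [
    # Labelled file: users can view and edit it, but EXTRACT is not granted.
    Document("Results 2024.docx", {"VIEW", "EDIT", "DOCEDIT", "PRINT"}),
    Document("Team handbook.docx", {"VIEW", "EXTRACT", "EDIT"}),
]
print(grounding_candidates(docs))  # ['Team handbook.docx']
```

Note that the model only covers background grounding; it says nothing about in-app features that work on content already open in the editor.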

It’s a subtle but powerful setting. And it’s one that gives you precise control over what Copilot knows.

Step-by-step: Creating a label that blocks Copilot access

To configure this, head over to the Microsoft Purview portal and follow these steps:

- Go to Information Protection and choose Sensitivity labels.

- Either create a new label or add a sublabel under an existing parent label if you want to group permissions by category.

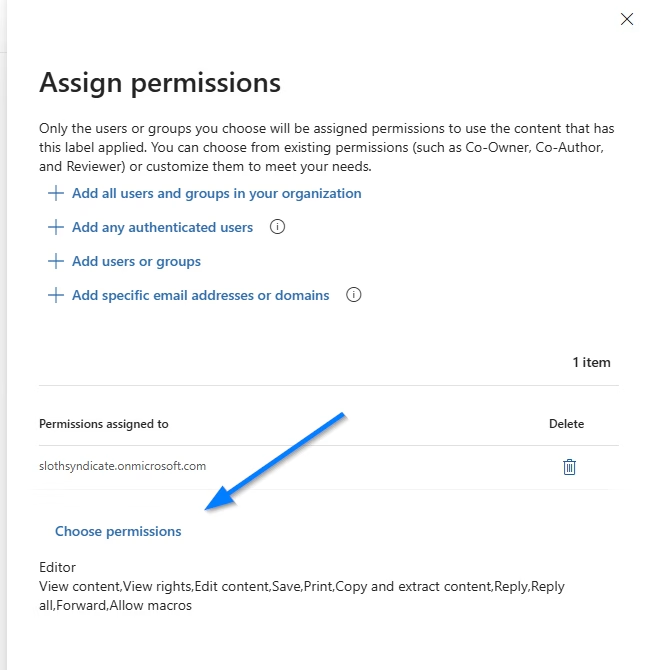

- In the access control step, choose to assign permissions now and select the users who should be able to access the file. For our scenario, we select all users and groups in the organisation.

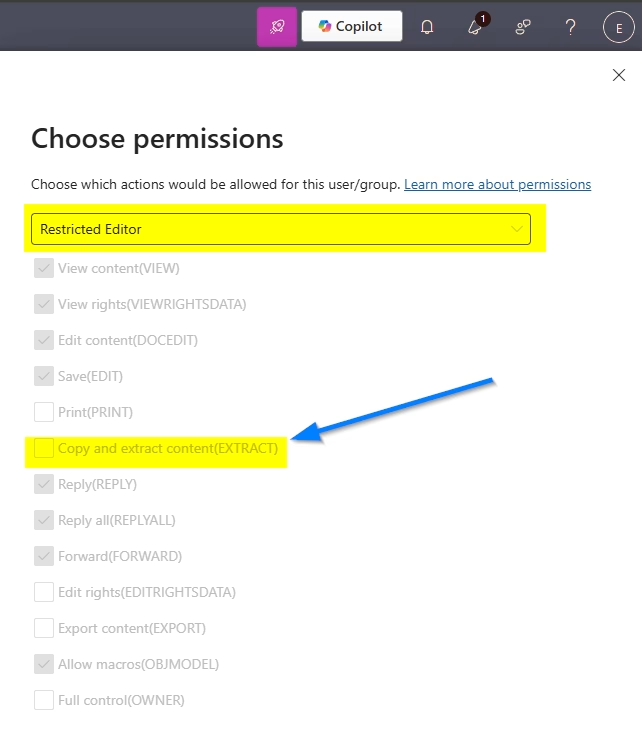

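- Under Choose permissions, pick a preset permission level or create your own custom permissions. For our scenario, we give users the Restricted Editor permission level, because it leaves the EXTRACT permission (shown as “Copy and extract content”) disabled. If you build custom permissions instead, make sure that option is cleared.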

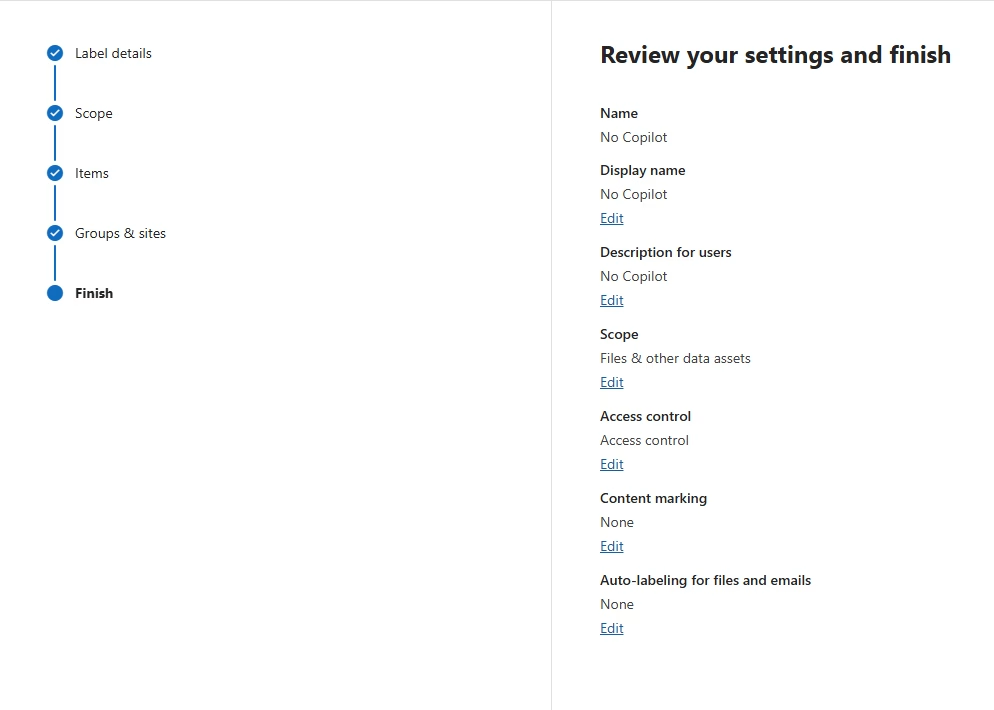

- Finish configuring the label and publish it to the users or groups who might work with these files. You can publish labels broadly or use scoped policies to target specific departments or security groups.

Keep in mind it may take up to 24 hours for the label to be fully visible and functional within your tenant, especially in large environments.
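If you’d rather script the label than click through the wizard, the Security & Compliance PowerShell cmdlets `New-Label` and `Set-Label` accept custom permissions through the `-EncryptionRightsDefinitions` parameter, written as `identity:RIGHT1,RIGHT2` pairs separated by semicolons. The Python sketch below only assembles such a string and refuses to include EXTRACT. The domain `contoso.com`, the chosen rights list, and the `rights_definition` helper are assumptions for illustration, so check the cmdlet documentation before using the output.

```python
# Usage rights to grant. EXTRACT is deliberately absent so Copilot
# can't use the file for grounding. This list is an illustrative choice,
# not a Microsoft preset.
GRANTED_RIGHTS = ["VIEW", "VIEWRIGHTSDATA", "DOCEDIT", "EDIT", "PRINT",
                  "REPLY", "REPLYALL", "FORWARD"]


def rights_definition(assignments: dict[str, list[str]]) -> str:
    """Build an 'identity:RIGHT,RIGHT;identity:RIGHT' string (hypothetical helper)."""
    parts = []
    for identity, rights in assignments.items():
        # Guard against accidentally re-enabling Copilot grounding.
        if "EXTRACT" in rights:
            raise ValueError(f"{identity} would be granted EXTRACT")
        parts.append(f"{identity}:{','.join(rights)}")
    return ";".join(parts)


print(rights_definition({"contoso.com": GRANTED_RIGHTS}))
# contoso.com:VIEW,VIEWRIGHTSDATA,DOCEDIT,EDIT,PRINT,REPLY,REPLYALL,FORWARD
```

Raising an error rather than silently dropping EXTRACT makes a misconfigured rights list fail loudly before it ever reaches your tenant.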

Testing the result with Copilot

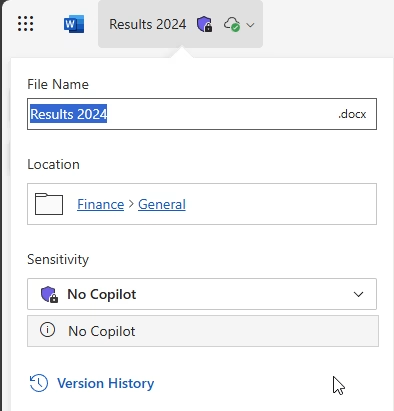

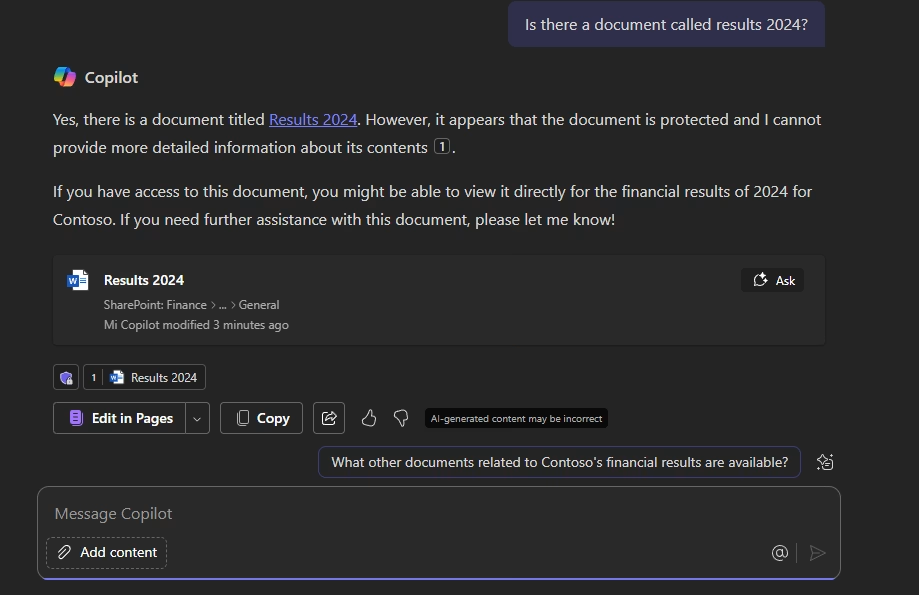

Now let’s apply the label to a test document. I’ve got a file called Results 2024.docx, stored in a SharePoint site used by the Finance team. It contains the year-end results for Contoso, and we want to prevent Copilot from referencing or using it.

With the sensitivity label applied and EXTRACT disabled:

- If I ask Copilot “What are the 2024 results for Contoso?”, it won’t reference this file in its answer.

- If I try to generate a presentation or Word document based on it, Copilot won’t be able to use it as a source.

- If I open the file manually and start writing or editing, I can still use in-app Copilot features like rewrite, summarise, or adjust tone. These features are based on what’s visible in the editor, not the file’s extractability.

This setup gives you the best of both worlds: people can work with sensitive files as needed, but AI services are kept at a safe distance.

Real-world use cases

There are several practical situations where disabling EXTRACT makes sense:

- HR documents: Internal evaluations, exit interview notes, or workforce planning files.

- Finance data: Early drafts, forecasts, or sensitive internal metrics.

- Product roadmaps: Strategy slides or notes that haven’t been approved for wider distribution.

- Legal documents: Contracts, NDAs, and negotiation drafts.

You can even use sublabels to define multiple levels of control. For example, a parent label might cover “Confidential – Internal Use”, while sublabels define whether AI tools are allowed or not.
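One way to picture that structure is a parent label whose sublabels differ only in whether EXTRACT is granted. The sublabel names, the rights sets, and the `ai_grounding_allowed` property below are hypothetical examples; the link between EXTRACT and “AI allowed” reflects the behaviour described in this post rather than an official schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sublabel:
    name: str
    rights: frozenset[str]

    @property
    def ai_grounding_allowed(self) -> bool:
        # Modelled rule: Copilot can ground on content only when
        # both VIEW and EXTRACT are granted.
        return {"VIEW", "EXTRACT"} <= self.rights


# Rights shared by both sublabels; only EXTRACT differs.
BASE = frozenset({"VIEW", "VIEWRIGHTSDATA", "EDIT", "DOCEDIT", "PRINT"})

PARENT = "Confidential – Internal Use"
SUBLABELS = [
    Sublabel("AI allowed", BASE | {"EXTRACT"}),
    Sublabel("No AI", BASE),
]

for sub in SUBLABELS:
    status = "allowed" if sub.ai_grounding_allowed else "blocked"
    print(f"{PARENT} / {sub.name}: Copilot grounding {status}")
```

Keeping every other right identical means users see the same editing experience under either sublabel; the only thing that changes is whether Copilot can draw on the file.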

Copilot is powerful. Your labels should be too.

Sensitivity labels are more than a compliance tool. They’re a governance framework that lets you control how people and systems interact with your data. By configuring the EXTRACT permission carefully, you ensure that Copilot draws insights only from content that is meant to inform AI-generated responses.

As organisations become more AI-enabled, it’s crucial to balance innovation with control. Microsoft 365 gives you the tools to do both. You just need to know where to look and how to configure them to fit your data strategy.

If you’ve already labelled your data for access, it’s time to go one step further. Give Copilot the green light on the right content, and a firm “not this one” on the rest.