AI tools like Microsoft 365 Copilot are transforming how employees work, but they also introduce new risks if sensitive information is not properly secured. Microsoft Purview now includes Data Security Posture Management (DSPM) for AI, which gives IT admins and decision-makers the tools to prepare user devices for safe AI usage. This post covers the essentials: setting up DSPM for AI, onboarding devices, configuring DLP and Insider Risk policies, and using the Purview browser extension to support secure, compliant AI use.

What is DSPM for AI

Data Security Posture Management for AI in Purview gives visibility into how AI tools like Copilot interact with organizational data. It identifies overshared content, offers one-click protection policies, and monitors AI activity across Microsoft and third-party apps. DSPM enables organizations to manage data risks proactively rather than reacting after data exposure occurs. This is critical when AI models can inadvertently surface or process sensitive data.

Preparing Devices: Key Prerequisites

To monitor and control AI-related activities on user devices, the following prerequisites must be in place:

- Licensing: Users must have Microsoft 365 E5 Compliance licensing and Copilot licenses assigned.

- Auditing: Microsoft Purview Audit must be enabled to capture Copilot interactions.

- Device Onboarding: Devices must be onboarded to Microsoft Purview (or Defender) Endpoint DLP. Use Intune, Group Policy, or Defender onboarding packages.

- Browser Extension Deployment: Install the Microsoft Purview Compliance Extension on Chrome and Edge browsers. This enables monitoring and enforcement of DLP on web-based AI interactions.

Without these in place, Purview cannot monitor AI usage effectively.
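
The prerequisites above can be expressed as a simple readiness check. This is a minimal sketch, not a Purview API: the `DeviceState` class and its field names are hypothetical, and the data would have to come from your own inventory or reporting exports.

```python
from dataclasses import dataclass


@dataclass
class DeviceState:
    """Hypothetical snapshot of one user's device; field names are illustrative."""
    user: str
    has_e5_compliance: bool
    has_copilot_license: bool
    audit_enabled: bool
    onboarded_to_endpoint_dlp: bool
    extension_browsers: frozenset = frozenset()


# Browsers on which the post asks for the Purview extension to be installed.
REQUIRED_BROWSERS = frozenset({"chrome", "edge"})


def missing_prerequisites(state: DeviceState) -> list[str]:
    """Return the prerequisites from the list above that this device does not meet."""
    gaps = []
    if not (state.has_e5_compliance and state.has_copilot_license):
        gaps.append("licensing")
    if not state.audit_enabled:
        gaps.append("auditing")
    if not state.onboarded_to_endpoint_dlp:
        gaps.append("device onboarding")
    if not REQUIRED_BROWSERS <= state.extension_browsers:
        gaps.append("browser extension")
    return gaps
```

An empty result means the device meets all four prerequisites; anything else names the gap to close before relying on Purview's AI monitoring for that user.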

Setting Up Data Loss Prevention (DLP) Policies for AI

Data Loss Prevention in Purview is key to controlling sensitive data exposure through AI. Two DLP areas need to be addressed:

- Copilot Protection: Create DLP policies targeting the Microsoft 365 Copilot location. Block Copilot from processing documents with specific sensitivity labels or sensitive information types. This prevents Copilot from using confidential data in AI responses. You can read more about this policy in my previous blog post, linked at the end of this post.

- Third-party AI Monitoring: Configure Endpoint DLP rules to prevent copying or uploading sensitive data to third-party AI sites such as ChatGPT, Claude, or Gemini. Microsoft maintains a list of recognized AI domains. Deploy rules that either block or warn users when sensitive data is detected heading toward these sites.
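
To make the block-or-warn behavior concrete, here is a conceptual sketch of the decision an Endpoint DLP rule makes for a paste or upload. It is not how Purview is implemented or configured: the function names are hypothetical, and `EXAMPLE_AI_DOMAINS` is a three-entry stand-in for the list of AI domains that Microsoft maintains.

```python
from urllib.parse import urlparse

# Illustrative stand-in only; Microsoft maintains the real list of AI domains.
EXAMPLE_AI_DOMAINS = frozenset({"chatgpt.com", "claude.ai", "gemini.google.com"})


def is_ai_site(url: str, ai_domains=EXAMPLE_AI_DOMAINS) -> bool:
    """True if the URL's host is a listed domain or a subdomain of one."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in ai_domains)


def evaluate_upload(url: str, contains_sensitive_data: bool, mode: str = "warn") -> str:
    """Return 'allow', 'warn', or 'block' for a paste or upload to `url`.

    `mode` is the action the rule takes on a match: 'warn' or 'block'.
    """
    if mode not in ("warn", "block"):
        raise ValueError("mode must be 'warn' or 'block'")
    if contains_sensitive_data and is_ai_site(url):
        return mode
    return "allow"
```

The rule only fires when both conditions hold: the content is sensitive and the destination is a recognized AI site. Everything else passes through untouched.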

The DSPM for AI solution shows whether these policies are already in place.

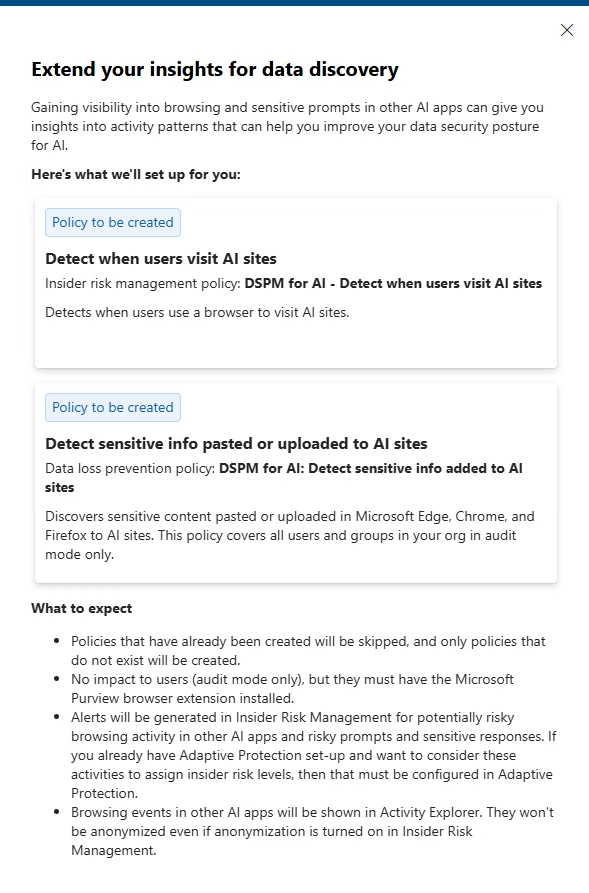

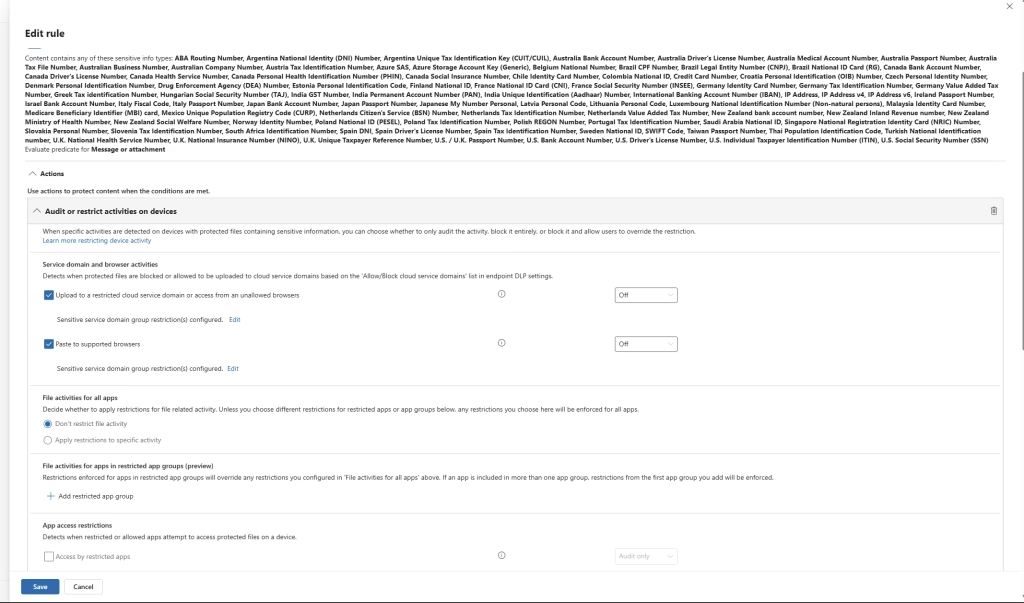

You can choose to have these policies set up automatically. The DLP policy looks like this after it’s been created for you:

Enabling Insider Risk Management (IRM) for AI

DLP blocks individual risky actions, but Insider Risk Management detects broader risky patterns. Microsoft Purview IRM now includes a “Risky AI usage” policy template. It identifies users creating risky AI prompts, uploading sensitive data to AI sites, or repeatedly accessing AI services against policy.

Deploy the Risky AI Usage policy and scope it to high-risk users initially. Ensure that browser extension deployment is complete because signals from web usage are essential for IRM AI detection. Investigate alerts to identify users needing intervention, training, or other response.
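
The difference between a per-action DLP decision and a pattern-based IRM signal can be illustrated with a small aggregation. This is a conceptual sketch only and does not reflect how Purview scores risk: the function name, the seven-day window, and the threshold of three events are all assumptions chosen for illustration.

```python
from collections import Counter
from datetime import datetime, timedelta


def users_with_risky_pattern(events, now, window=timedelta(days=7), threshold=3):
    """Flag users with repeated risky AI activity inside a time window.

    events: iterable of (user, timestamp) pairs, one per risky AI-related action.
    Returns a sorted list of users with at least `threshold` events in the window.
    """
    cutoff = now - window
    counts = Counter(user for user, ts in events if cutoff <= ts <= now)
    return sorted(user for user, n in counts.items() if n >= threshold)


# Usage: one user repeats the behavior, another does it once.
now = datetime(2025, 1, 10)
events = [
    ("adele", datetime(2025, 1, 9)),
    ("adele", datetime(2025, 1, 8)),
    ("adele", datetime(2025, 1, 5)),
    ("megan", datetime(2025, 1, 9)),
    ("adele", datetime(2024, 12, 1)),  # outside the window, ignored
]
flagged = users_with_risky_pattern(events, now)
```

A single event would be handled by DLP on its own; it is the repetition over time that turns it into something worth an investigation.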

This is what the Insider Risk Management policy looks like after it’s been set up:

Deploying the Purview Browser Extension

The Microsoft Purview browser extension is critical for visibility into AI site usage and enforcing Endpoint DLP policies. Deploy it using Intune or Group Policy to Chrome and Edge. It ensures that web uploads, text pastes, and risky website visits are detectable and enforceable by DLP and Insider Risk policies.

Without the extension, AI-related activities in browsers may bypass monitoring. Ensure the extension cannot be removed by users to maintain compliance coverage.
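
One common way to make an extension non-removable is the `ExtensionInstallForcelist` browser policy, which Chrome and Edge both support and which takes entries of the form `extension_id;update_url`. The sketch below builds that value as JSON. The helper name is hypothetical, the extension ID is deliberately a placeholder to be taken from Microsoft's documentation, and the default update URL is the Chrome Web Store one; Edge add-ons use a different update URL.

```python
import json

# Update URL for extensions hosted in the Chrome Web Store.
CHROME_WEB_STORE_UPDATE_URL = "https://clients2.google.com/service/update2/crx"


def forcelist_policy(extension_id: str, update_url: str = CHROME_WEB_STORE_UPDATE_URL) -> str:
    """Build an ExtensionInstallForcelist policy value as a JSON string."""
    if not extension_id or ";" in extension_id:
        raise ValueError("extension_id must be a non-empty ID without ';'")
    policy = {"ExtensionInstallForcelist": [f"{extension_id};{update_url}"]}
    return json.dumps(policy, indent=2)


# Placeholder ID: replace with the extension ID from Microsoft's documentation.
print(forcelist_policy("<purview-extension-id>"))
```

Extensions installed through this policy are installed silently and cannot be disabled or removed by the user. In Intune or Group Policy you would enter the same `extension_id;update_url` string in the corresponding policy setting rather than deploying a JSON file.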

Quick summary checklist:

Licensing and Audit Readiness

- Assign Microsoft 365 E5 Compliance and Copilot licenses to users

- Ensure Microsoft Purview Auditing is enabled

Device and Browser Preparation

- Onboard user devices into Microsoft Purview Endpoint DLP

- Deploy the Microsoft Purview Compliance Extension for Chrome and Edge

- Prevent users from removing the browser extension

Data Protection Setup

- Create DLP policies targeting the Microsoft 365 Copilot location to block sensitive content from AI processing

- Configure Endpoint DLP rules to monitor and block data uploads to third-party AI sites

- Start DLP policies in audit mode, then move to enforcement after validation

User Risk Monitoring

- Deploy the “Risky AI usage” Insider Risk Management policy

- Scope initial policies to high-risk users or departments

- Investigate and respond to Insider Risk alerts related to AI usage

Final Validation

- Confirm all user devices are reporting AI usage telemetry

- Review Purview DSPM for AI dashboards regularly

- Communicate AI data protection policies to users where required

Time to get started!

Preparing user devices for AI is not optional. To use AI safely, organizations must:

- Onboard devices into Microsoft Purview

- Enable auditing and deploy the Purview browser extension

- Configure DLP policies for both Copilot and external AI sites

- Monitor behavioral patterns with Insider Risk Management

With these steps in place, IT admins can enable productivity through Microsoft 365 Copilot while protecting sensitive data against AI-related risks. A safe, compliant AI environment starts with strong device and policy foundations.

This was the last post in the “Getting ready for Microsoft 365 Copilot” series. You can find the previous parts here:

- https://slothpointonline.net/copilot/are-you-actually-ready-for-microsoft-365-copilot-heres-what-you-need-in-place/

- https://slothpointonline.net/sharepoint-online/your-content-is-a-mess-and-its-about-to-confuse-microsoft-365-copilot/

- https://slothpointonline.net/sharepoint-online/detecting-and-preventing-oversharing-in-microsoft-365/

- https://slothpointonline.net/purview/whats-stopping-copilot-from-exposing-the-wrong-data-sensitivity-labels-are-your-first-line-of-defense/

- https://slothpointonline.net/purview/advanced-sensitivity-labels-how-to-block-copilot-from-using-certain-files/

- https://slothpointonline.net/purview/data-loss-prevention-policies-for-m365-copilot-the-extra-protection-you-want/